Evaluate an LLM

LLM as judge

Workflows and applications utilizing a large language model (LLM) require a specialized approach to evaluation and monitoring. Since generated text can be unpredictable, it is crucial to identify and filter out unacceptable responses before they reach end users. This includes detecting and mitigating toxic content, as well as addressing social, gender, cultural, and other biases. Without proper evaluation measures, sensitive data and other undesirable content may appear in responses leading to potential business value damage.

To address these challenges, AI Cloud enables the seamless creation of LLM-as-judge evaluators, facilitating more effective assessment of model outputs. Given the diverse use cases for LLM applications, evaluation criteria must be adaptable. Providing access to custom evaluator creation ensures that assessments can be tailored to best fit specific needs and objectives.

Additionally, users can leverage a locally deployed LLM for evaluation, reducing dependency on external providers and optimizing costs.

Our LLM-as-judge approach is model-agnostic, allowing you to choose any evaluator LLM that suits your needs - for example, Prometheus. You can deploy your selected LLM in AI Cloud and use it with a Prompt operator to define the specific evaluation criteria.

Example scenario: Evaluating an LLM-based workflow

Workflow A generates text-based explanations using an LLM. It processes inputs, constructs a prompt, sends it to the LLM, and returns the generated response as output. On the output port of Workflow A, traces can be saved—capturing both the exact prompt sent to the LLM and the corresponding response.

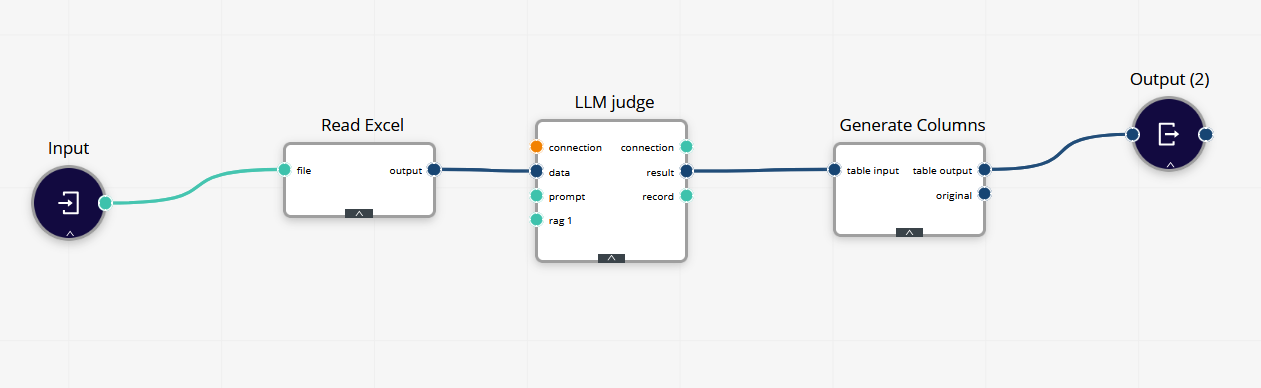

To evaluate Workflow A's performance, an Evaluation Workflow is created using the LLM Judge operator (essentially a prompt-with-data operator). This Evaluation Workflow takes the output of Workflow A as input. Within the operator, an evaluation prompt is defined, and the mapping of the input file that contains Workflow A's traces.

Depending on the evaluation LLM used, you can specify exact scoring criteria and provide an example of an ideal response. The optimal prompt structure may vary across different evaluation models, but you can fine-tune your prompts in the Prompt Designer.

At the output port of the Evaluation Workflow, you'll receive an evaluation score for each input-output pair from Workflow A. This feedback as a dataset can be valuable for fine-tuning the LLM or optimizing prompts within Workflow A later.