AI agent operators

Generative AI models can be used directly inside Workflow Studio to build AI-based agents that interact with the rest of the AI Cloud platform and with the outside world. This page covers how to use the Generative AI operators in Workflow Studio to send prompts to a connected LLM and to define AI agents.

Connecting to a model

To work with a generative model, you need to provide a connection to that model. Generative models are normally deployed by an external service provider such as OpenAI or Microsoft Azure OpenAI. The connection contains the information needed to reach the model, in most cases key:value pairs holding the API key and the model URL. These credentials are stored in a connection object and managed in the Connections section of each project.

Once the connection object is created, it can be accessed via the assets tab in Workflow Studio and added from there to the workflow. Every operator that uses a generative model has a connection input port. It is also possible to use different models for different operators in the same workflow.
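As a rough illustration only, the information held by such a connection can be pictured as a small set of key:value pairs. The class `ModelConnection`, the field names `name`, `api_key` and `url`, and all example values below are hypothetical; they are not the actual schema of a connection object in the platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConnection:
    """Hypothetical stand-in for a connection object (not the platform's schema)."""
    name: str
    api_key: str
    url: str

    def masked(self) -> dict:
        """Return the key:value pairs with the secret mostly hidden, e.g. for logging."""
        return {"name": self.name, "api_key": "****" + self.api_key[-4:], "url": self.url}


# Two connections can coexist, so different operators in one workflow
# may point at different models. All values are made-up examples.
openai_conn = ModelConnection("openai-demo", "sk-example-0000", "https://api.openai.com/v1")
azure_conn = ModelConnection("azure-demo", "az-example-1111", "https://example.openai.azure.com")

print(openai_conn.masked())
```

Keeping the credentials in one object and passing only that object around mirrors the idea of a connection input port: the operator itself never needs the key typed into its parameters.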

Operator overview

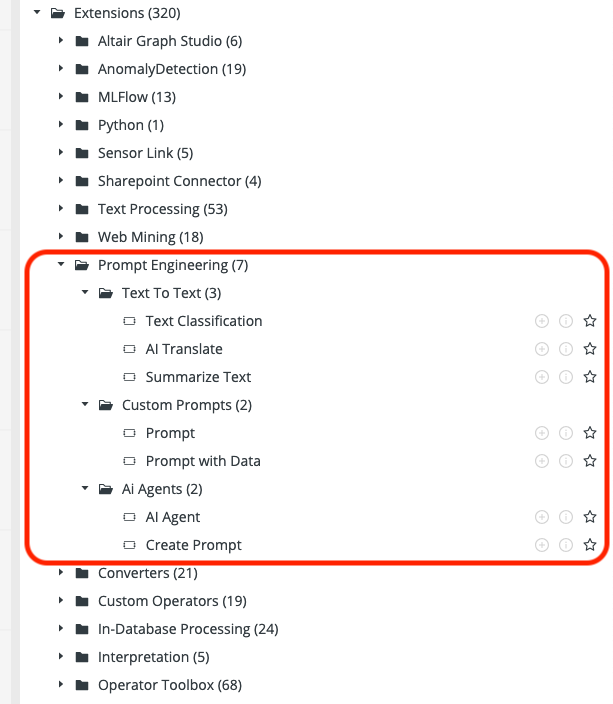

The Generative AI and AI agent operators are bundled in the Prompt Engineering folder that can be found under extensions in the operator panel.

There are three groups of operators for different use cases: Text to Text, Custom Prompts, and AI agent operators.

Because all these operators use the same interface to Large Language Models (LLMs), they share a common set of parameters for fine-tuning the model behavior. The shared parameters are explained in a separate section at the end of this document.

Text to Text

One of the most common and iconic tasks for generative models is working with text. There are three preconfigured operators that provide the interface needed to tackle text-related requests directly.

Text Classification

The Text Classification operator is used for submitting the contents of the selected nominal column to an LLM for classifying it into the specified categories. It can optionally be enriched with RAG input data for grounding the model and providing additional context.

Input

- Data: This table input port expects the data which contains the column to submit to the LLM for classification.

- Prompt: A preconfigured system prompt that will be used to query the LLM, together with its config. If this optional port is connected, the respective settings of the operator will vanish and the system prompt and settings contained within will be used instead.

- RAG: These optional input ports are used to provide the LLM with reference data (the R in RAG).

Output

- Result: The data table containing the new target column with the classification result, as well as a new score column with the confidence of each classification.

Parameters

- Input Column: The column to classify.

- Categories: The categories to classify the text into.

- Target Column: The name of the new column which should contain the classification result.

AI Translate

The AI Translate operator is used for submitting the content of the selected nominal column to an LLM for translation to the specified target language. It can optionally be enriched with RAG input data for grounding the model and providing additional context.

Input

- Data: This table input port expects the data which contains the column to submit to the LLM for translation.

- Prompt: A preconfigured system prompt that will be used to query the LLM, together with its config. If this optional port is connected, the respective settings of the operator will vanish and the system prompt and settings contained within will be used instead.

- RAG: These optional input ports are used to provide the LLM with reference data (the R in RAG).

Output

- Result: The data table containing the new target column with the translation result.

Parameters

- Input Column: The column to translate.

- Target Language: The target language to translate into.

- Target Column: The name of the new column which should contain the translation.

Summarize Text

The Summarize Text operator is used for submitting the content of the selected nominal column to an LLM to create a short summary. It can optionally be enriched with RAG input data for grounding the model and providing additional context.

Input

- Data: This table input port expects the data which contains the column to submit to the LLM for a summary.

- Prompt: A preconfigured system prompt that will be used to query the LLM, together with its config. If this optional port is connected, the respective settings of the operator will vanish and the system prompt and settings contained within will be used instead.

- RAG: These optional input ports are used to provide the LLM with reference data (the R in RAG).

Parameters

- Input Column: The column to summarize.

- Summary Length: The number of sentences the summary should have.

- Target Column: The name of the new column which should contain the summary.

Custom Prompts

The Custom Prompts operators are the most generic of the LLM operators. They send arbitrary queries to the provided model and can be customized with precisely designed prompts. What sets them apart from other available LLM interfaces is their integration with Workflow Studio: they can be part of a regular workflow and, in particular, accept and produce compatible data tables.

Prompt

The Prompt operator is used for submitting user-defined prompts to an LLM. This represents the most general way to interact with an LLM.

Input

- Prompt: A preconfigured prompt that will be used to query the LLM, together with its config. If this optional port is connected, the respective settings of the operator will vanish and the prompt and settings contained within will be used instead.

- RAG: These optional input ports are used to provide the LLM with reference data (the R in RAG). RAG references within the prompt or system prompt refer to the RAG input data at the specified port (starting at 1) and consist of two curly brackets around the reference name, e.g. {{RAG 1}}. If RAG ports are connected that have no RAG reference within the actual prompt, each of them is appended as a simple additional message to the LLM to use the provided data as an example.

Output

- Result: The data table containing the prompt result.

- Record: A complete representation of the prompt that was used to query the LLM, including all input prompt messages, the response, as well as config and meta information.
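The handling of RAG references described for the RAG input ports can be sketched as follows. This is a minimal illustration and not the operator's implementation: the function name `resolve_rag_refs`, the representation of each RAG input as a plain string, and the wording of the appended message are all assumptions.

```python
import re

# Matches references such as {{RAG 1}}; the number is the input port, starting at 1.
RAG_REF = re.compile(r"\{\{RAG (\d+)\}\}")


def resolve_rag_refs(prompt: str, rag_inputs: list[str]) -> list[str]:
    """Return the messages to send: the filled-in prompt plus one extra message
    for every connected RAG input that the prompt never references."""
    referenced: set[int] = set()

    def fill(match: re.Match) -> str:
        port = int(match.group(1))
        if 1 <= port <= len(rag_inputs):
            referenced.add(port)
            return rag_inputs[port - 1]
        return match.group(0)  # leave references to unconnected ports untouched

    messages = [RAG_REF.sub(fill, prompt)]
    for port, data in enumerate(rag_inputs, start=1):
        if port not in referenced:
            # Hypothetical wording; the actual appended message is not documented here.
            messages.append(f"Use the following data as an example:\n{data}")
    return messages


messages = resolve_rag_refs(
    "Context: {{RAG 1}} Question: What is the capital of France?",
    ["Paris is the capital of France.", "Berlin is the capital of Germany."],
)
for message in messages:
    print(message)
```

In this sketch the first RAG input is placed exactly where the prompt references it, while the second, unreferenced input ends up in a separate message.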

Prompt with Data

The Prompt with Data operator can be used to submit complex prompts to an LLM, enriched with the data of each row from the input data and, optionally, with RAG data references for grounding the LLM and providing additional context. The prompt is applied row-wise, each time replacing placeholders with the actual values from any number of columns in the input data.

Input

- Data: This table input port expects the data which contains the column(s) to enrich the custom prompt for the LLM.

- Prompt: A preconfigured prompt that will be used to query the LLM, together with its config. If this optional port is connected, the respective settings of the operator will vanish and the prompt and settings contained within will be used instead.

- RAG: These optional input ports are used to provide the LLM with reference data (the R in RAG). RAG references within the prompt or system prompt refer to the RAG input data at the specified port (starting at 1) and consist of two curly brackets around the reference name, e.g. {{RAG 1}}. If RAG ports are connected that have no RAG reference within the actual prompt, each of them is appended as a simple additional message to the LLM to use the provided data as an example.

Output

- Result: The data table containing the new target column with the custom prompt result.

- Record: A complete representation of ONE of the prompts that were used to query the LLM, including all input prompt messages, the response, as well as config and meta information. Because this operator generates an individual prompt for each row and submits the prompts in parallel, the prompt available here is not necessarily the prompt for the first row, but it is the prompt for one of the first rows.
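Why the Record output may not belong to the first row can be illustrated with a small concurrency sketch. It assumes, purely for illustration, that the record is taken from whichever request of the first batch finishes first; how the operator actually selects it is not specified here. The function `fake_llm_call` is a hypothetical stand-in for a real LLM request.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
import time


def fake_llm_call(row_index: int, prompt: str) -> dict:
    """Hypothetical stand-in for an LLM request; later rows answer faster here."""
    time.sleep(0.05 * (3 - row_index))
    return {"row": row_index, "prompt": prompt, "response": f"answer for row {row_index}"}


prompts = [f"Summarize row {i}" for i in range(3)]

with ThreadPoolExecutor(max_workers=3) as pool:
    futures = [pool.submit(fake_llm_call, i, p) for i, p in enumerate(prompts)]
    # The first *completed* request supplies the record, not necessarily row 0.
    record = next(as_completed(futures)).result()
    # The results themselves are still collected in row order for the output table.
    results = [f.result()["response"] for f in futures]

print("record taken from row", record["row"])
print(results)
```

The result column stays aligned with the input rows because it is read back in submission order, while the record simply reflects one of the parallel requests.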

Parameters

- Prompt: The custom prompt, with optional row-wise column, RAG, and expected result references as well as global RAG references (via {{RAG x}} placeholders) that are filled in before the prompt is sent to the LLM. Column references refer to the name of a column in the input data and consist of two square brackets around the column name, e.g. [[Column 1]]. Column RAG references refer to a column in the input data that provides context and consist of a square bracket followed by a curly bracket around the column name, e.g. [{Column 1}]. Column expected result references refer to a column in the input data that holds the expected result and consist of a square bracket followed by a round bracket around the column name, e.g. [(Column 1)]. In the end, all three kinds of column references are simply replaced with the respective column values for each row; the distinction between them exists to support Prompt Studio, which can use it to discern which purpose each placeholder served. There is also a global reference, which is taken from the operator input ports in full rather than row-wise. These global RAG references refer to the RAG input data at the specified port (starting at 1) and consist of two curly brackets around the reference name, e.g. {{RAG 1}}.
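The placeholder syntax of the Prompt parameter can be made concrete with a small sketch that fills a template for each row. The function `render_prompt`, the representation of a row as a dictionary and of RAG inputs as plain strings, and the example column names are assumptions made for illustration; the operator's real implementation may differ.

```python
import re

# One pattern for all four placeholder kinds described in the Prompt parameter:
# [[col]] column, [{col}] column RAG, [(col)] expected result, {{RAG n}} global RAG.
PLACEHOLDER = re.compile(
    r"\[\[(?P<col>.+?)\]\]"
    r"|\[\{(?P<ctx>.+?)\}\]"
    r"|\[\((?P<exp>.+?)\)\]"
    r"|\{\{RAG (?P<rag>\d+)\}\}"
)


def render_prompt(template: str, row: dict, rag_inputs: list[str]) -> str:
    """Fill every placeholder in a single pass for one row of the input data."""
    def fill(match: re.Match) -> str:
        if match.group("rag") is not None:
            # Global reference: the same port content for every row (ports start at 1).
            return rag_inputs[int(match.group("rag")) - 1]
        # All three column reference kinds are replaced with the row's value.
        column = match.group("col") or match.group("ctx") or match.group("exp")
        return str(row[column])

    return PLACEHOLDER.sub(fill, template)


template = "{{RAG 1}}\nContext: [{Notes}]\nReview: [[Text]]\nExpected: [(Label)]"
rows = [
    {"Text": "Great phone", "Notes": "electronics", "Label": "positive"},
    {"Text": "Broke after a day", "Notes": "toys", "Label": "negative"},
]
rag_inputs = ["Label reviews as positive or negative."]

rendered = [render_prompt(template, row, rag_inputs) for row in rows]
print(rendered[0])
```

Substituting in a single pass means that text inserted from a column or RAG input is not scanned again for further placeholders, which is a design choice of this sketch rather than documented behavior.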

AI agent operators

The agent operators are very similar to the regular prompt operators above. Their special trait is that they are configured to interact with the AI Cloud platform and can perform various tasks independently. A detailed description of their features and use cases can be found on this page.

Both operators can also leverage additional RAG input to provide further information to the agent.

AI Agent

This operator acts as an AI agent within the Altair AI Cloud and can interact with the platform via natural language. Since it is backed by an LLM, the exact interactions are determined by the reasoning of the LLM. It can access various APIs of the platform that would normally only be available via the UI, and it can use any deployments available to the current project, which lets users define their own agentic functions via workflows.

Create Prompt

The Create Prompt operator will task an LLM with creating a new prompt object which can be consumed by other agents and prompt engineering operators.

Parameters for Prompt Engineering

This is a list of shared parameters for all operators of the prompt extension.

They handle the input of additional prompt configurations, the model provider used, and the model type. They also expose fine-grained options to fine-tune the behavior of the model.

- Override Prompt Config: This parameter becomes available if a Prompt object is provided at the prompt input port. By default, with this override not selected, the LLM provider, the model, and the configuration are taken from the Prompt object. If the override is active, the LLM provider, the model, and the configuration are taken from the parameters of this operator instead. This makes it possible to create a prompt with one LLM but use it with a different LLM.

- Provider: The provider of the model.

- Deployment ID: If the provider is set to Local AI, this is the ID of the local deployment used for accessing the model specified below.

- Model: The model to submit the prompt to.

- Max Output Tokens: The maximum number of output tokens that should be returned by the LLM. Note that different LLMs may have different maximum output token capabilities. A token is usually between 2 and 4 characters long, but the exact length depends on the model, the language, and the content.

- Response Format: The response format of the LLM. Some models may not be able to deliver all available options.

- System Prompt: An optional system prompt that can be left empty. The system prompt is an initialization prompt which can be sent to the LLM. It is often used to make the LLM adopt a certain persona or style in its answers, such as "You are a factual chatbot which prefers short answers."

- Temperature: Controls the randomness used in the answers. Lower values lead to less random answers. A temperature of 0 yields the least random, largely deterministic model behavior.

- Top P: Controls diversity via nucleus sampling. A value of 0.5 means that half of all likelihood-weighted options would be considered. A value of 1.0 means all options are considered.

- Frequency Penalty: How much to penalize new tokens based on their frequency in the answer so far. Can be used to reduce repetitiveness.

- Presence Penalty: How much to penalize new tokens based on their presence in the answer so far. Increases the model's likelihood of talking about new topics.
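To give an intuition for how Temperature, Top P, Frequency Penalty and Presence Penalty interact, the following sketch applies them to a toy distribution over three tokens. It follows the commonly described scheme in which penalties are subtracted from the token logits before sampling; the exact formulas are specific to each provider, and the function names and example numbers here are assumptions for illustration only.

```python
import math


def apply_penalties(logits, counts, frequency_penalty=0.0, presence_penalty=0.0):
    """Lower the logit of tokens that already appear in the answer so far."""
    return {
        tok: logit
        - frequency_penalty * counts.get(tok, 0)                  # grows with each repetition
        - presence_penalty * (1 if counts.get(tok, 0) > 0 else 0)  # flat, once present
        for tok, logit in logits.items()
    }


def softmax_with_temperature(logits, temperature):
    """Turn logits into probabilities; temperature 0 is treated as greedy (argmax)."""
    if temperature == 0:
        best = max(logits, key=logits.get)
        return {tok: 1.0 if tok == best else 0.0 for tok in logits}
    scaled = {tok: logit / temperature for tok, logit in logits.items()}
    peak = max(scaled.values())  # subtract the maximum for numerical stability
    exps = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def top_p_filter(probs, top_p):
    """Keep the most likely tokens until their cumulative probability reaches top_p."""
    kept, cumulative = {}, 0.0
    for tok, p in sorted(probs.items(), key=lambda kv: kv[1], reverse=True):
        kept[tok] = p
        cumulative += p
        if cumulative >= top_p:
            break
    total = sum(kept.values())
    return {tok: p / total for tok, p in kept.items()}  # renormalize the survivors


logits = {"cat": 2.0, "dog": 1.0, "fish": 0.0}
counts = {"cat": 2}  # "cat" was already generated twice in the answer so far

penalized = apply_penalties(logits, counts, frequency_penalty=0.5, presence_penalty=0.5)
probs = softmax_with_temperature(penalized, temperature=1.0)
print(top_p_filter(probs, top_p=0.5))
```

In this toy example the penalties push the repeated token "cat" below "dog", and a Top P of 0.5 then narrows the candidates to the single most likely token, whereas a Top P of 1.0 would keep all three.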