Prompt Studio

Prompt engineering overview

Prompt engineering is the practice of designing and refining the prompts given to AI models to achieve the desired outputs. It involves crafting clear, specific, and effective prompts that guide the AI to generate accurate, relevant, and high-quality responses. LLMs driven by well-crafted prompts can be used for a variety of data analytics and machine learning tasks. The following list is not exhaustive, but here are some use cases where they can be an asset:

- Synthetic data generation when there is insufficient data at hand
- Detect and extract outliers in a dataset
- Summarize and/or extract relevant information from structured or unstructured data
- Predict future outcomes from historical data
- Translate multilingual user feedback into a common language
- Classify the sentiment of a text, e.g. analyze customer feedback to determine whether the emotional tone of the message is positive, negative, or neutral

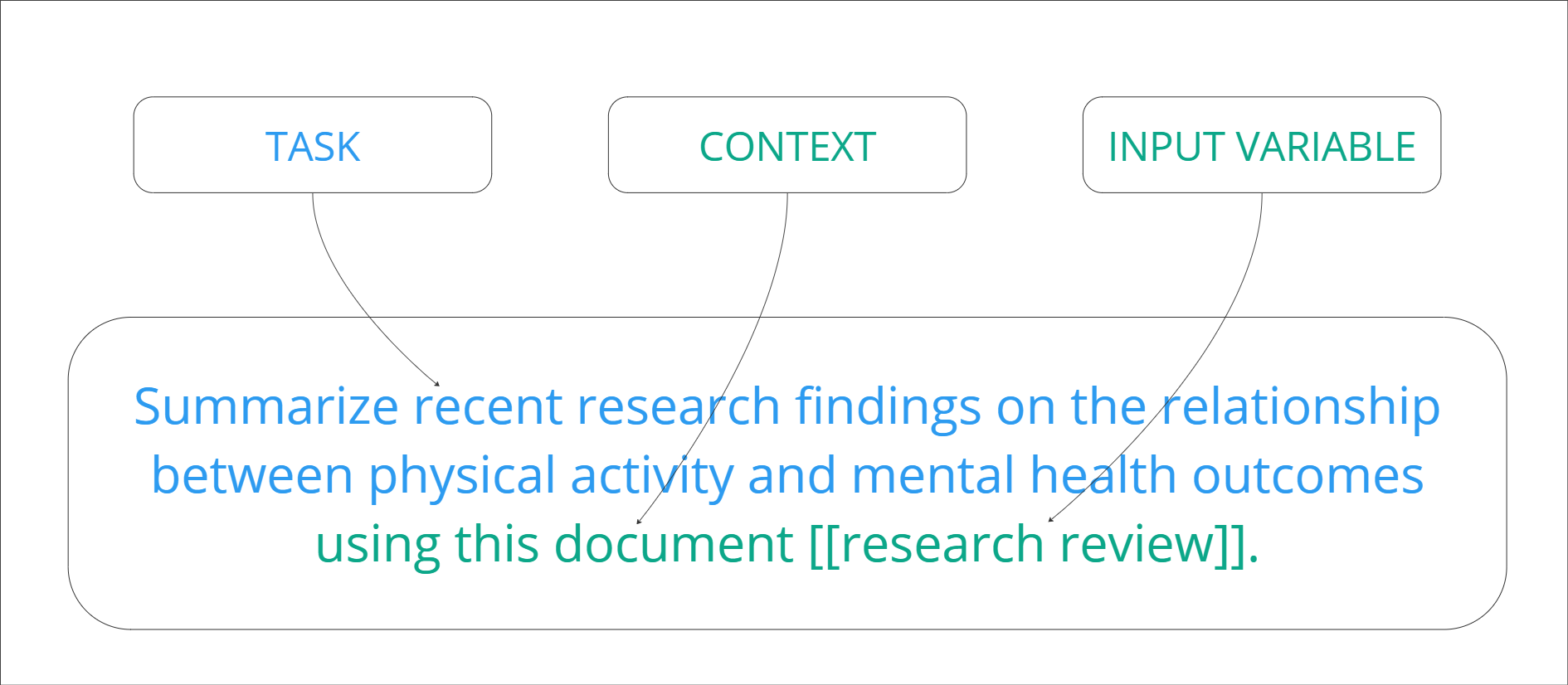

Prompts generally consist of two main parts: task and context. The task is an instruction that tells the LLM what to do, while the context provides the data it will act on. Context often includes input variables, which hold the raw data or information you want the LLM to process. When using input variables, state explicitly in your prompt what the input data is. The variable name is replaced with the actual data at runtime, so if you don't describe the data clearly in the prompt, the model may lose track of what it represents.
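As a rough illustration of task, context, and input variables, the sketch below builds a prompt from a fixed task instruction and a context section with one variable. The curly-brace placeholder is Python's `str.format` syntax and the names `TASK`, `CONTEXT`, and `render_prompt` are hypothetical; Prompt Studio's own variable syntax may differ. Note how the context labels the data ("Patient reviews, one per line") so the model still knows what it is looking at after substitution.

```python
from __future__ import annotations

# Hypothetical prompt template. {reviews} is an input variable; the curly-brace
# syntax is Python's str.format, not necessarily Prompt Studio's own syntax.
TASK = (
    "Classify the sentiment of each patient review as positive, negative, "
    "or neutral. Return one label per line, in the same order as the reviews."
)
# The context states explicitly what the substituted data is, so the model
# does not have to guess once the variable has been replaced.
CONTEXT = "Patient reviews, one per line:\n{reviews}"


def render_prompt(reviews: list[str]) -> str:
    """Combine task and context, replacing the input variable with real data."""
    return TASK + "\n\n" + CONTEXT.format(reviews="\n".join(reviews))


print(render_prompt(["The staff were kind.", "I waited three hours."]))
```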

Prompt engineering is an iterative process: keep experimenting with different ways of giving instructions and providing context until you find the response that best fits your needs.

How can Prompt Studio help you with prompt engineering?

Prompt Studio gives you the ability to:

- Connect to LLMs from the most popular providers or use locally deployed models
- Test various prompts or prompt-LLM combinations to observe how different models respond
- Track prompt-to-output relationships during the prompt design process
- Manually evaluate LLM outputs
- Compare prompt-LLM combinations based on their responses, latency, and other parameters
- Test prompts on manually created test cases or on a batch of inputs from a defined dataset
- Apply input variables to create reusable prompt templates that can be included in workflows
- Use prompts in project workflows

Connect to LLM providers or use deployed open-source models

To use LLMs, you first need to create a connection.

Prompt Studio shows you the full range of available providers, but you’ll see a warning if no connection is selected or if the selected connection does not support the selected provider. A connection can hold secrets for multiple local deployments, but these are optional; Prompt Studio only shows you the deployments you have access to.

Read more: Deploy an LLM

Create a Prompt Studio

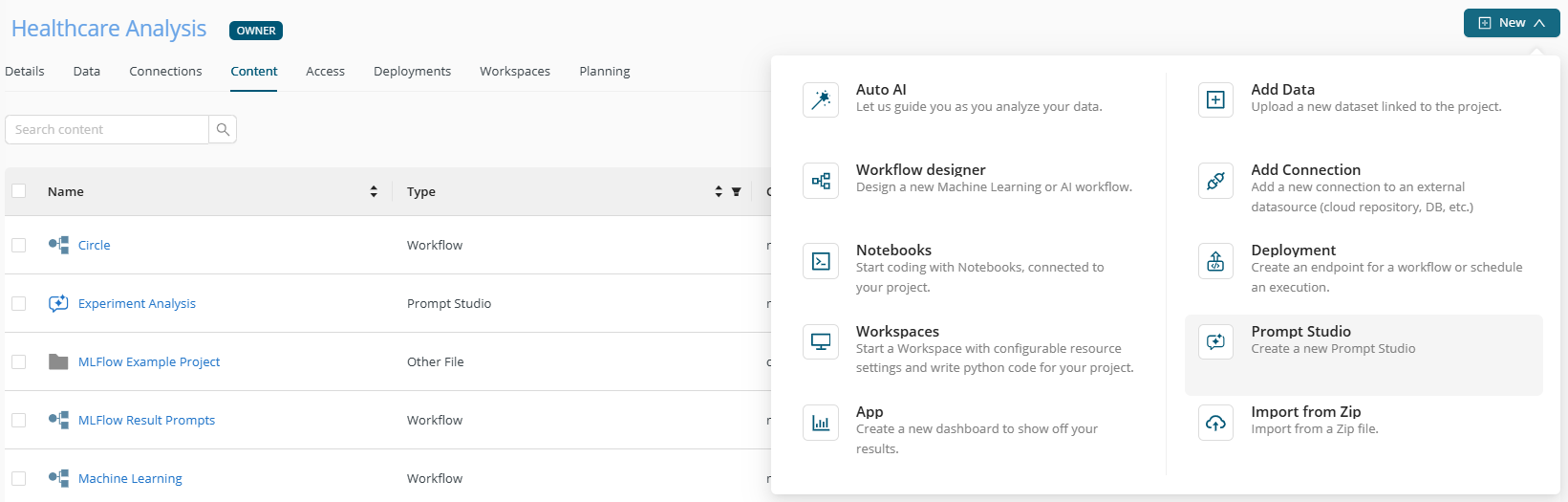

You can create a new Prompt Studio within a project. Technically, each Studio is a folder on the project's Content tab. A Studio can contain one or more prompts and, as such, is not versioned itself.

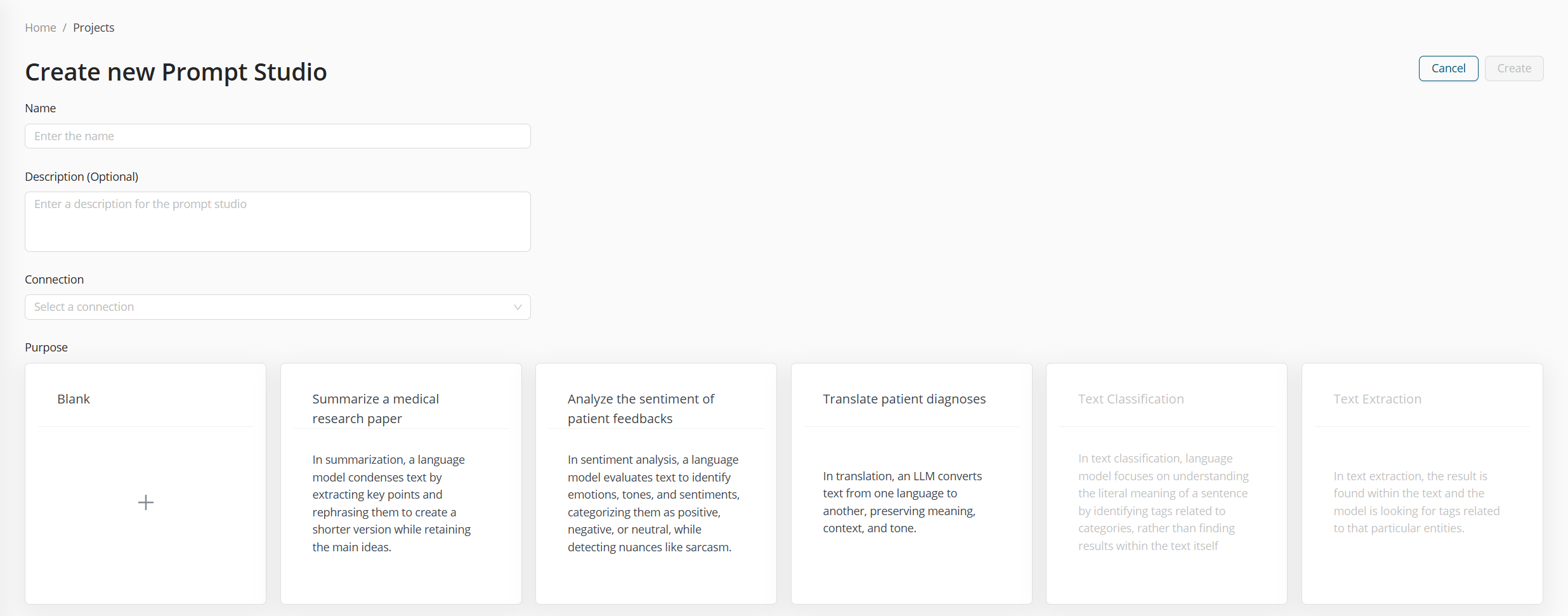

You can give the Studio a name that is unique within the project, add an optional description, select an existing connection, and choose whether to start your prompt engineering from a predefined template or from scratch. Selecting a connection is required, as without one you won't be able to send requests to the LLMs. If you haven't created a connection yet, you can navigate to the connection setup page directly from the Studio creation page.

Prompt templates

We have created a handful of templates that you can access while creating a new Prompt Studio in your project. They can serve as a starting point for tailoring prompts to your needs. All templates are based on healthcare scenarios and cover the following common LLM use cases:

- Summarization: In summarization, a language model condenses text by extracting key points and rephrasing them to create a shorter version while retaining the main ideas.
- Sentiment analysis: In sentiment analysis, a language model evaluates text to identify emotions, tones, and sentiments, categorizing them as positive, negative, or neutral, while detecting nuances like sarcasm.
- Translation: In translation, an LLM converts text from one language to another, preserving meaning, context, and tone.
- Text classification: In text classification, the language model focuses on the meaning of the text and assigns it tags from predefined categories, rather than finding the result within the text itself.
- Text extraction: In text extraction, the result is found within the text itself, and the language model looks for the parts of the text that correspond to particular entities.

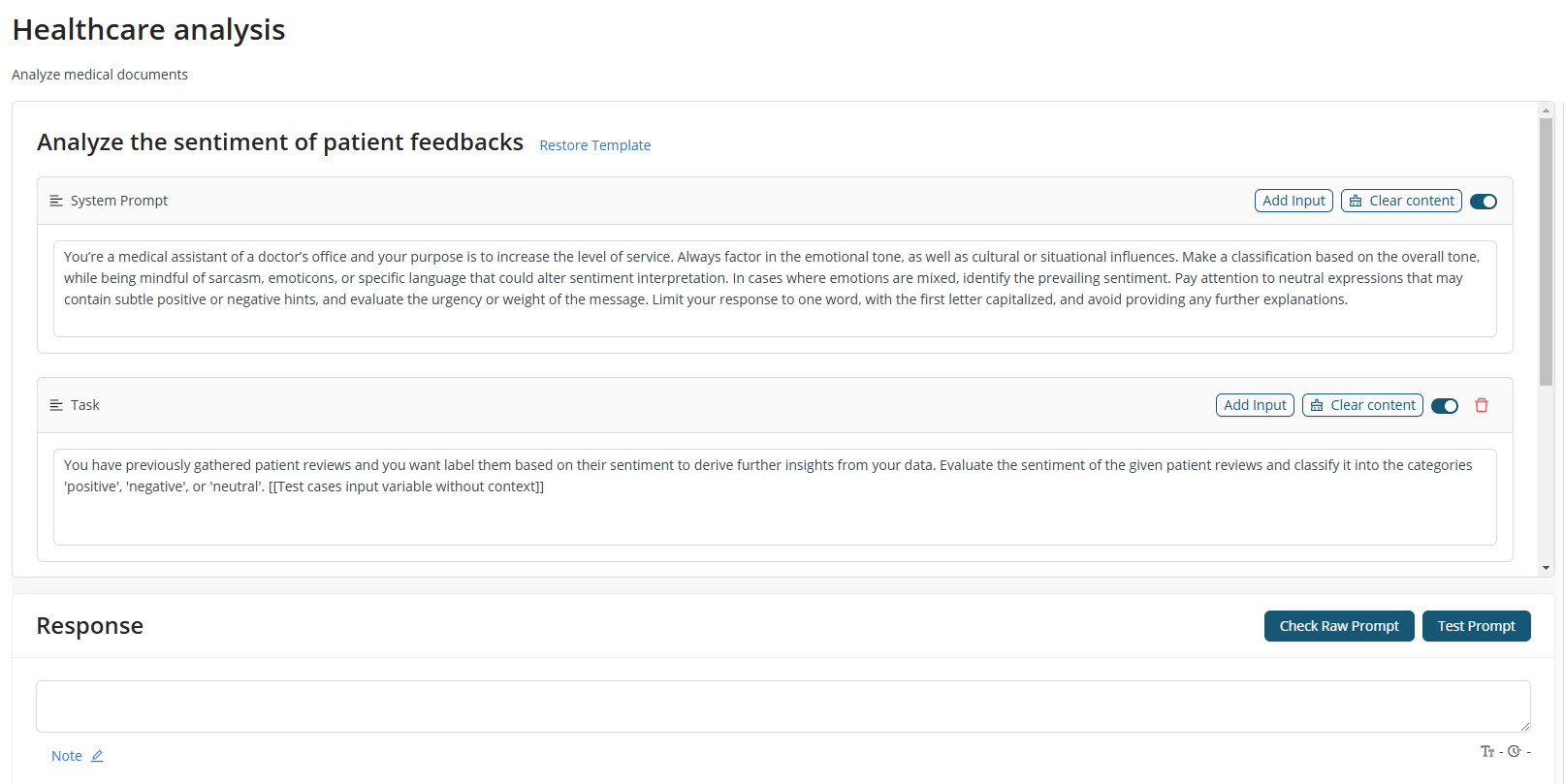

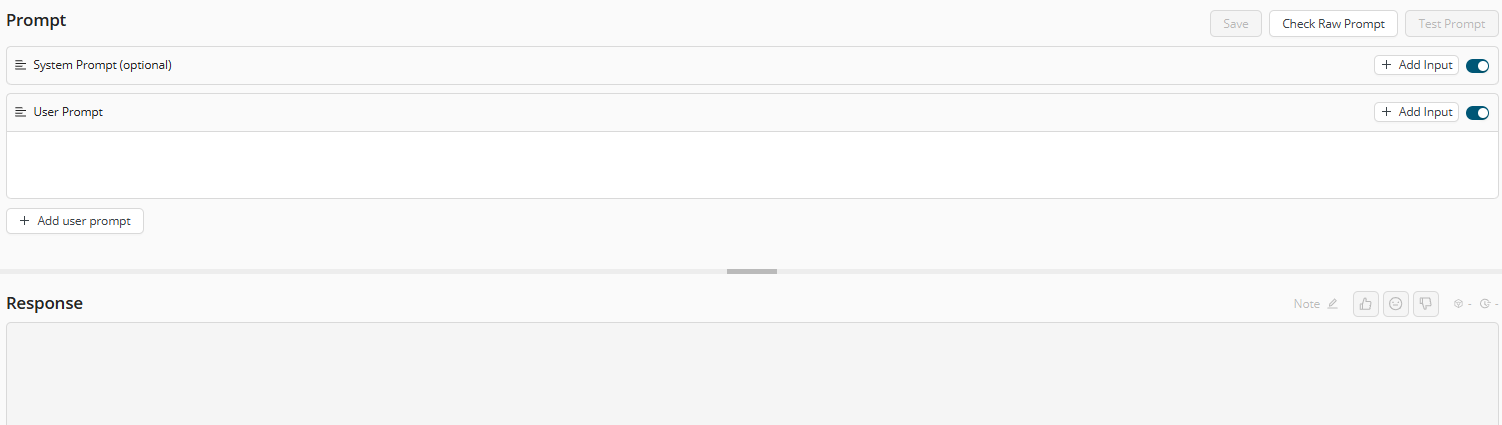

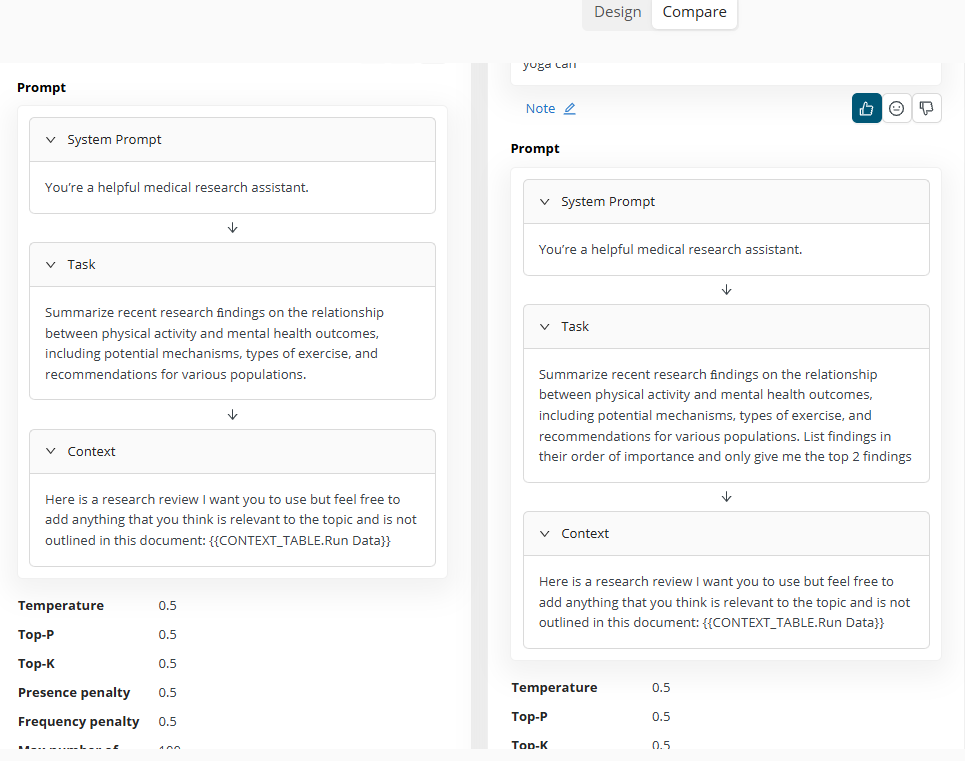

Design view

You can create prompts and iterate on them in Design view. It mainly consists of two sections:

- The editing area, where you write your prompt text
- The parameter panel, where you select the model you want to use and refine your prompt

The editing area consists of system prompt and user prompt sections:

- The system prompt defines how the AI should behave across all interactions, establishing its tone, ethical guidelines, and general approach. System prompts are the "how" and "why" behind the AI's responses.
- A user prompt typically contains dynamic instructions that guide the AI in performing specific tasks or answering queries. User prompts are the "what" you want the AI to do at a given moment, e.g. analyzing the sentiment of patient reviews. You can add any number of user prompt blocks to logically separate the context and instruction sections. Note that the prompt blocks are concatenated and sent to the selected model as a single message.

The prompt blocks include several useful tools designed to help you iterate more efficiently on your prompts:

- Clear the entire content of the system or user prompt
- Reorder user prompt blocks using drag-and-drop
- Toggle whether a prompt block is included in the prompt sent to the LLM during experimentation
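The way user prompt blocks are combined can be sketched as follows: only the blocks that are toggled on are kept, in their current order, and joined into one user message alongside the system prompt. This is an illustrative model, not Prompt Studio's implementation. The `PromptBlock` class, the blank-line separator between blocks, and the `role`/`content` message shape (the chat-message format used by several provider APIs, such as OpenAI's Chat Completions) are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PromptBlock:
    text: str
    included: bool = True  # models the on/off toggle of a block (hypothetical)


def build_messages(system_prompt: str, blocks: list[PromptBlock]) -> list[dict]:
    """Concatenate the included user blocks, in order, into one user message."""
    # Assumed separator: a blank line between consecutive blocks.
    user_text = "\n\n".join(block.text for block in blocks if block.included)
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_text},
    ]


blocks = [
    PromptBlock("Patient review: The discharge letter was confusing."),
    PromptBlock("Draft instruction that is switched off.", included=False),
    PromptBlock("Classify the sentiment as positive, negative, or neutral."),
]
for message in build_messages("You are a concise healthcare analyst.", blocks):
    print(message["role"], "->", message["content"])
```

Reordering blocks by drag-and-drop corresponds to changing the order of the `blocks` list; the excluded block never reaches the model.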

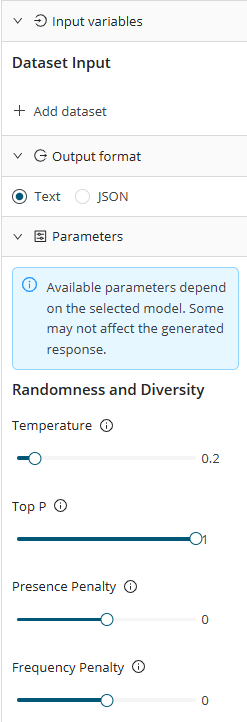

The parameter panel consists of model selection and refinement sections:

- Model selection: you select a model by first selecting a connection. A connection represents one or more providers or locally deployed models. Once you select a connection, the models of the available providers can be accessed in the corresponding dropdowns. Providers can offer several models; if you want to test your prompt with another model, you can change this parameter at any time within the same Studio. You can also type a model name into the model selector even if it is not in the list. Free-text models added this way are stored only for the submitted prompt.
- Input variables: see the input variables section below for details.
- Output format: specify whether the LLM response should be valid JSON or plain text. A structured output such as JSON helps ensure that the response meets the needs of your downstream processing.
- Settings: refine the selected model’s behaviour. Note that not all parameters are supported by every model, and default values vary by provider.
  - Randomness and diversity
    - Temperature: The temperature parameter controls randomness in the response, that is, how often the model outputs a less likely token. Values greater than 0.5 produce more creative results, appropriate for creative writing and brainstorming, while values less than 0.5 produce more focused results, suitable for technical articles. For most factual use cases, such as data extraction and truthful Q&A, a temperature of 0 is best.
    - Top-P: P is a cumulative probability threshold. The model samples only from the smallest set of most probable tokens whose probabilities add up to P, which focuses the response on the most probable options.
    - Top-K: Like temperature, top-k can increase or decrease the variance of the results. It limits the model to the K most probable tokens (think of tokens as words, for simplicity) at each step. For example, if you set K = 1, the model outputs the most likely token at each step of generation. Larger values are more creative, while smaller values are more focused. The general recommendation is to adjust either Temperature or Top-K, not both at the same time.
  - Length
    - Presence Penalty: The presence penalty controls the recurrence of words or phrases that have already appeared in the response. Values below 0.5 keep the response closer to the words and phrases in the input prompt, while values above 0.5 (for example, presence_penalty=0.8) make it less constrained, allowing new terms and concepts to be included.
    - Frequency Penalty: The frequency penalty controls whether words or phrases are repeated. Values above 0.5 reduce the likelihood of a specific word or phrase appearing repeatedly (for example, frequency_penalty=0.9). Values below 0.1 permit more word reuse, but the response may sound redundant.
    - Maximum number of tokens: This does not set a target length for the output; it is a hard cutoff for token generation. Ideally, you won’t hit this limit often, as the model stops either when it considers the response finished or when it generates a stop sequence you defined.
    - Stop sequences: Sequences of characters that stop the model from generating further tokens. If the model generates one of the stop sequences you specify, generation stops at that point.
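To make the randomness settings more concrete, here is a simplified sketch of how temperature, top-k, and top-p are commonly applied when choosing the next token. It works on a hand-written table of token scores (logits) rather than a real model, and it is not the code any particular provider runs. The function name, the greedy handling of temperature 0, and the order of filtering (top-k, then top-p) are assumptions; the 0.5 thresholds mentioned above are provider-specific guidance and are not encoded here.

```python
from __future__ import annotations

import math
import random


def sample_next_token(logits: dict[str, float], temperature: float = 1.0,
                      top_k: int | None = None, top_p: float | None = None,
                      rng: random.Random | None = None) -> str:
    """Pick the next token from raw model scores (logits) in a simplified way."""
    if temperature == 0:
        # Treated here as greedy decoding: always return the highest-scoring token.
        return max(logits, key=logits.get)
    # Temperature scaling: values below 1 sharpen the distribution, above 1 flatten it.
    scaled = {tok: s / temperature for tok, s in logits.items()}
    # Softmax, subtracting the maximum for numerical stability.
    peak = max(scaled.values())
    weights = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(weights.values())
    ranked = sorted(((tok, w / total) for tok, w in weights.items()),
                    key=lambda pair: pair[1], reverse=True)
    if top_k is not None:
        # Top-K: keep only the K most probable tokens, then renormalize.
        ranked = ranked[:top_k]
        kept_mass = sum(p for _, p in ranked)
        ranked = [(tok, p / kept_mass) for tok, p in ranked]
    if top_p is not None:
        # Top-P: keep the smallest prefix whose probabilities add up to at least P.
        nucleus, cumulative = [], 0.0
        for tok, p in ranked:
            nucleus.append((tok, p))
            cumulative += p
            if cumulative >= top_p:
                break
        ranked = nucleus
    rng = rng or random.Random()
    tokens = [tok for tok, _ in ranked]
    probs = [p for _, p in ranked]
    return rng.choices(tokens, weights=probs, k=1)[0]


scores = {"positive": 2.0, "neutral": 1.0, "negative": 0.5, "maybe": -3.0}
print(sample_next_token(scores, temperature=0))  # greedy: the top-scoring token
print(sample_next_token(scores, temperature=0.7, top_p=0.9, rng=random.Random(42)))
```

With K = 1 or a very small P, only the single most probable token survives the filtering, which is why those settings behave almost deterministically.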

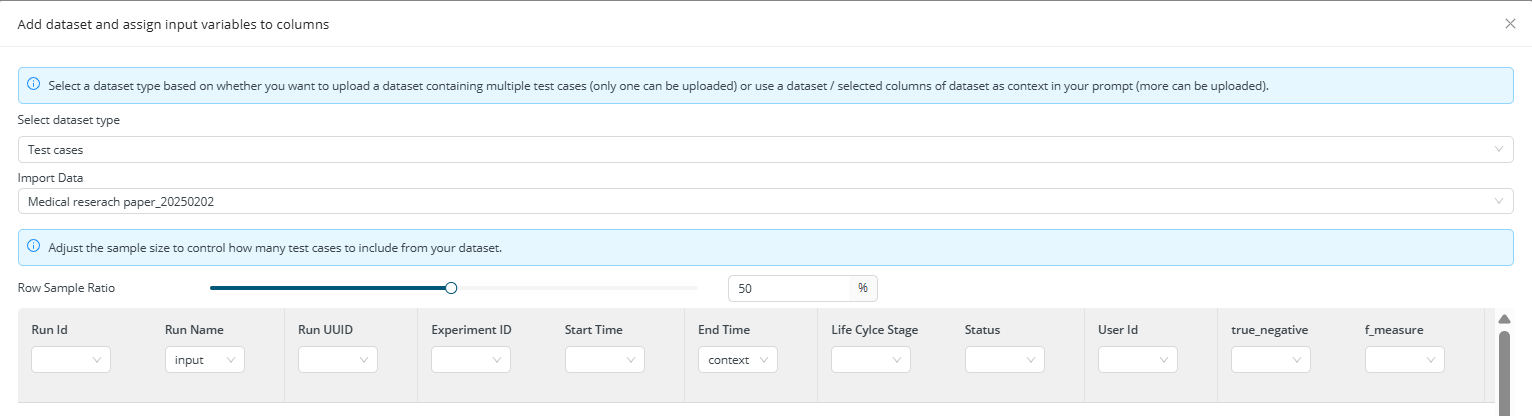
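The penalties and stop sequences can be illustrated the same way. OpenAI documents its presence and frequency penalties as a score adjustment: a token's score is lowered by its count so far times the frequency penalty, plus the presence penalty once if the token has appeared at all. The sketch below applies that formula to a toy score table and also shows stop-sequence truncation. Other providers may implement penalties differently, whether the stop sequence itself appears in the returned text varies by provider, and the function names are illustrative only.

```python
from __future__ import annotations

from collections import Counter


def apply_penalties(logits: dict[str, float], generated: list[str],
                    presence_penalty: float = 0.0,
                    frequency_penalty: float = 0.0) -> dict[str, float]:
    """Lower the scores of tokens that already occur in the generated text."""
    counts = Counter(generated)  # tokens not yet generated count as 0
    penalized = {}
    for tok, score in logits.items():
        seen = 1.0 if counts[tok] > 0 else 0.0
        # Frequency penalty grows with every repetition; presence penalty is flat.
        penalized[tok] = (score - counts[tok] * frequency_penalty
                          - seen * presence_penalty)
    return penalized


def truncate_at_stop(text: str, stop_sequences: list[str]) -> str:
    """Cut the text at the earliest stop sequence, excluding the sequence itself."""
    cut = len(text)
    for stop in stop_sequences:
        index = text.find(stop)
        if index != -1:
            cut = min(cut, index)
    return text[:cut]


print(apply_penalties({"pain": 1.0, "relief": 1.0}, ["pain", "pain"],
                      presence_penalty=0.5, frequency_penalty=0.25))
print(truncate_at_stop("Sentiment: negative\n###\nExtra text", ["###"]))
```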

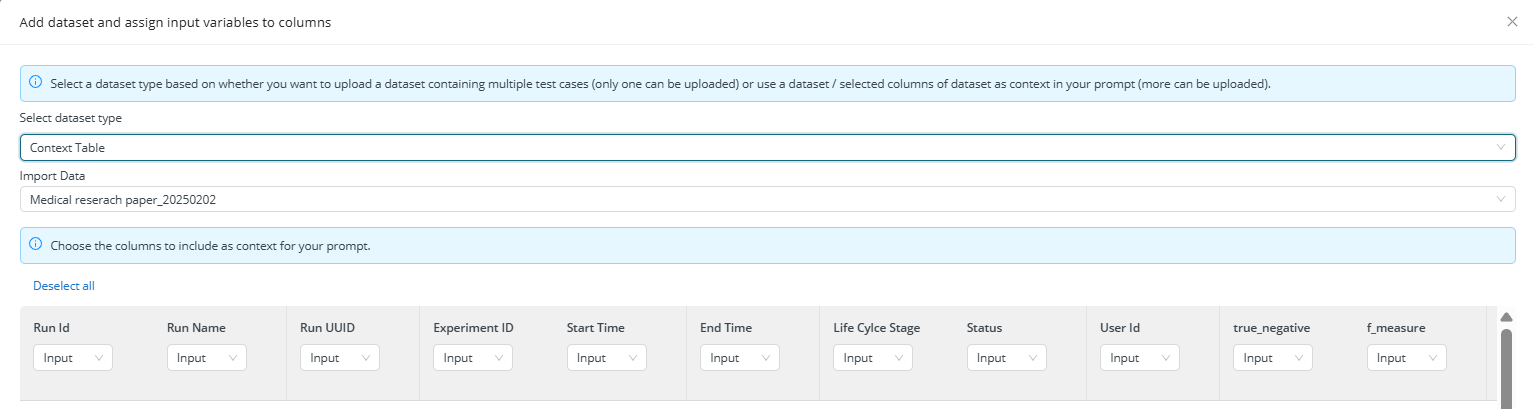

Input variables

Input variables are placeholders that you include in the static text of the prompt at design time and that are replaced with text dynamically at runtime. Currently, an input variable represents an entire dataset or one or more columns of a selected dataset, and uses the values from those columns. Choose the dataset type depending on whether you want to include multiple test cases or use an entire dataset (or selected columns of it) as context in your prompt.

- Test cases: when adding test cases, you select the dataset column that contains the inputs. If your dataset contains separate context for each test case, you can also select the corresponding context column. The rows of a test case dataset are sent to the model one by one, with values taken dynamically from the selected input (and context) columns. You can add only one test case dataset to a Prompt Studio, as comparing the same prompts across different sets of test cases is not meaningful.
- Context tables: you can add any number of context tables to your prompt, but each context dataset can be added to the Studio only once. By default, all columns of the dataset are included in your prompt, but you can remove any you don't need. The entire table content is sent to the model as text.
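The difference between the two dataset types can be sketched like this: a context table is serialized once, in full, with only the selected columns, while a test case dataset produces one prompt (and therefore one model call) per row. The datasets, column names, and template below are hypothetical, and CSV is only an assumed text format; the documentation does not specify how Prompt Studio renders a table as text.

```python
from __future__ import annotations

import csv
import io

# Hypothetical test case dataset: one input column and one context column.
TEST_CASES = [
    {"review": "The nurse explained everything clearly.", "ward": "Cardiology"},
    {"review": "Nobody answered the call button.", "ward": "Oncology"},
]
# Hypothetical context table that is sent to the model in full, as text.
WARDS = [
    {"ward": "Cardiology", "floor": "2", "visiting_hours": "10-18"},
    {"ward": "Oncology", "floor": "4", "visiting_hours": "12-20"},
]
TEMPLATE = (
    "Ward information:\n{table}\n"
    "Ward of this review: {context}\n"
    "Review: {input}\n"
    "Classify the sentiment of the review as positive, negative, or neutral."
)


def table_as_text(rows: list[dict], columns: list[str]) -> str:
    """Serialize the selected columns of an entire table as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, extrasaction="ignore",
                            lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def prompts_per_test_case(rows: list[dict], input_col: str, context_col: str,
                          table_text: str) -> list[str]:
    """Render one prompt per test case row; each is sent as a separate call."""
    return [
        TEMPLATE.format(table=table_text, context=row[context_col],
                        input=row[input_col])
        for row in rows
    ]


# Leave out the "floor" column, as you might remove a column in the Studio.
table_text = table_as_text(WARDS, ["ward", "visiting_hours"])
for prompt in prompts_per_test_case(TEST_CASES, "review", "ward", table_text):
    print(prompt, end="\n---\n")
```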

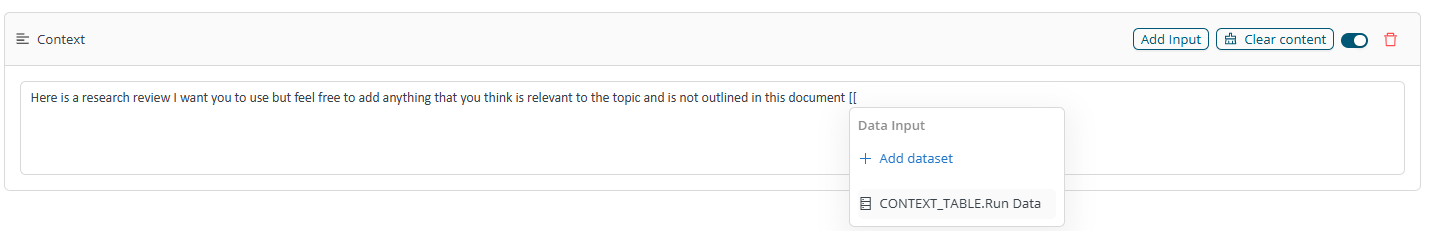

You can add new datasets or include input variables in your prompt in two ways:

- Clicking the Add input button at the top of each prompt block
- Using the [[ keyboard shortcut

Test and save prompts

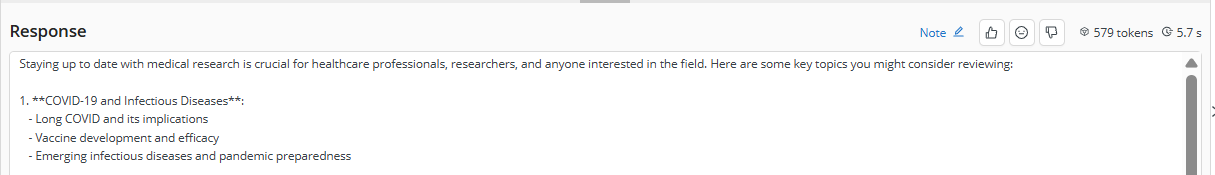

When your prompt is ready to be sent to the chosen LLM, click ‘Test Prompt’ to initiate a call. This creates your first iteration. Iterations are stored in the browser cache, so they are not kept long term. As long as they remain available there, prompt iterations can be accessed and edited in Prompt Studio.

Besides reviewing the response, you can:

- Check token and latency information for test iterations
- Add a custom note to an iteration (one note per prompt) to make it easier to identify
- Rate the response returned by the LLM, indicating whether you are satisfied, neutral, or dissatisfied with it. If you’ve included test cases from a dataset, you can rate the response for each test case individually. An aggregated score for all test cases in an iteration is displayed on its card in the history panel.
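Prompt Studio computes these figures for you; the sketch below only illustrates what they typically mean. Latency is measured as wall-clock time around the call, token counts are read from the provider's response, and per-test-case ratings are combined into one number. The response shape (`text`, `usage.prompt_tokens`, `usage.completion_tokens`) is a simplified assumption loosely modeled on OpenAI-style responses, `fake_model` is a stand-in for a real LLM call, and the 1/0/-1 rating mapping is hypothetical: the documentation does not say how the Studio calculates its aggregated score.

```python
from __future__ import annotations

import time
from statistics import mean
from typing import Callable

# Hypothetical mapping for illustration; not Prompt Studio's documented scoring.
RATING_SCORES = {"satisfied": 1, "neutral": 0, "dissatisfied": -1}


def timed_call(call_model: Callable[[str], dict], prompt: str) -> dict:
    """Call a model, measuring wall-clock latency and reading token usage."""
    start = time.perf_counter()
    response = call_model(prompt)
    latency_ms = (time.perf_counter() - start) * 1000
    usage = response.get("usage", {})
    return {
        "text": response.get("text", ""),
        "latency_ms": latency_ms,
        "prompt_tokens": usage.get("prompt_tokens"),
        "completion_tokens": usage.get("completion_tokens"),
    }


def aggregate_ratings(ratings: list[str]) -> float:
    """Average per-test-case ratings into one score for the iteration."""
    return mean(RATING_SCORES[r] for r in ratings)


def fake_model(prompt: str) -> dict:
    """Stand-in for a real LLM call; returns a canned response."""
    return {"text": "negative", "usage": {"prompt_tokens": 42, "completion_tokens": 1}}


result = timed_call(fake_model, "Classify: Nobody answered the call button.")
print(result["completion_tokens"], round(result["latency_ms"], 3))
print(aggregate_ratings(["satisfied", "neutral", "dissatisfied", "satisfied"]))
```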

To store a prompt iteration permanently, click Save, which creates a prompt object. You can optionally give the prompt object a name to replace the default one. Once an iteration is saved, it can no longer be deleted from the Studio, and it can be used in a workflow.

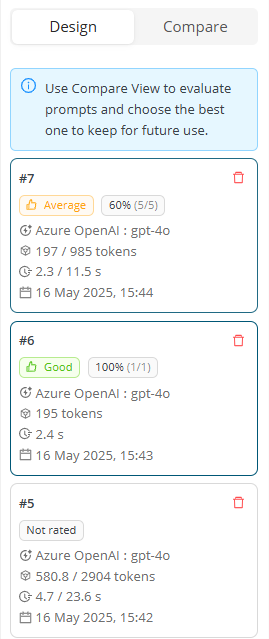

History panel

The history panel on the left is displayed once your Studio contains at least one iteration. It lists every prompt iteration (as long as it is still available in your browser cache) and every saved prompt in chronological order. To remove iterations that are no longer relevant, click the trash bin icon in the top right corner of the iteration card. The history panel is also where you select the prompts you want to compare.

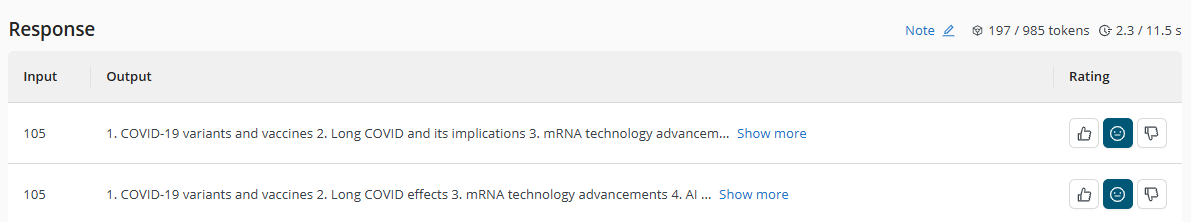

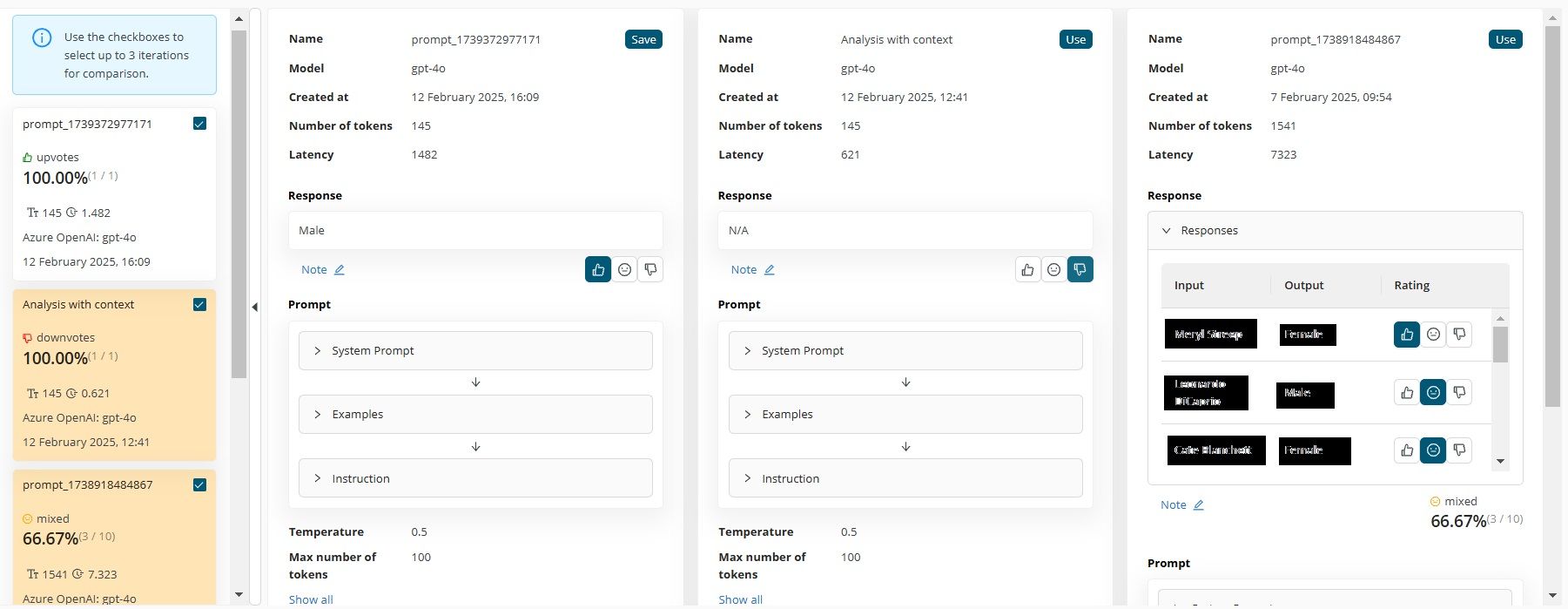

Compare view

In Compare view, you can compare selected prompt iterations based on various parameters (e.g. foundation model, response, latency, and token count).

- You can rate responses, edit previous ratings, and save the ones you want to keep for future reference.
- You can review the input prompt and refinement settings to assess how your modifications have influenced the output.

Using prompts in workflows

Saved prompts can be used in workflows in two ways:

- By clicking the Use button, which creates a new workflow
- By opening any workflow and selecting the prompt from the project’s Prompt Studio folder

The prompts you can use in your workflow are listed under the project’s Prompt Studio folders. Prompts connect to dedicated prompt operators via their prompt import port. The Prompt and Prompt with data operators are your tools for putting your prompts into action. Running a prompt operator (or the entire workflow that contains it) makes one or more calls to the selected LLM.

Prompt parameters are exposed in Workflow Studio but cannot be configured there, and new prompts can only be created in Prompt Studio. To modify a prompt, open Prompt Studio, create and save a new prompt, and then include it in your workflow.