Deploy an LLM

AI Cloud supports deploying Large Language Models (LLMs) from Hugging Face, using an approach similar to the one described in Deploy a Python Model. You can integrate both public and private Hugging Face models into your projects, bring them into production quickly, and build workflows around them.

By deploying an LLM, you create an endpoint that can be leveraged in multiple ways:

- Used within Workflow Studio from the Prompt or AI Agent operators to create AI-driven processes.
- Integrated into Prompt Studio for prompt engineering and testing.
- Accessed by external systems via API to power AI applications.

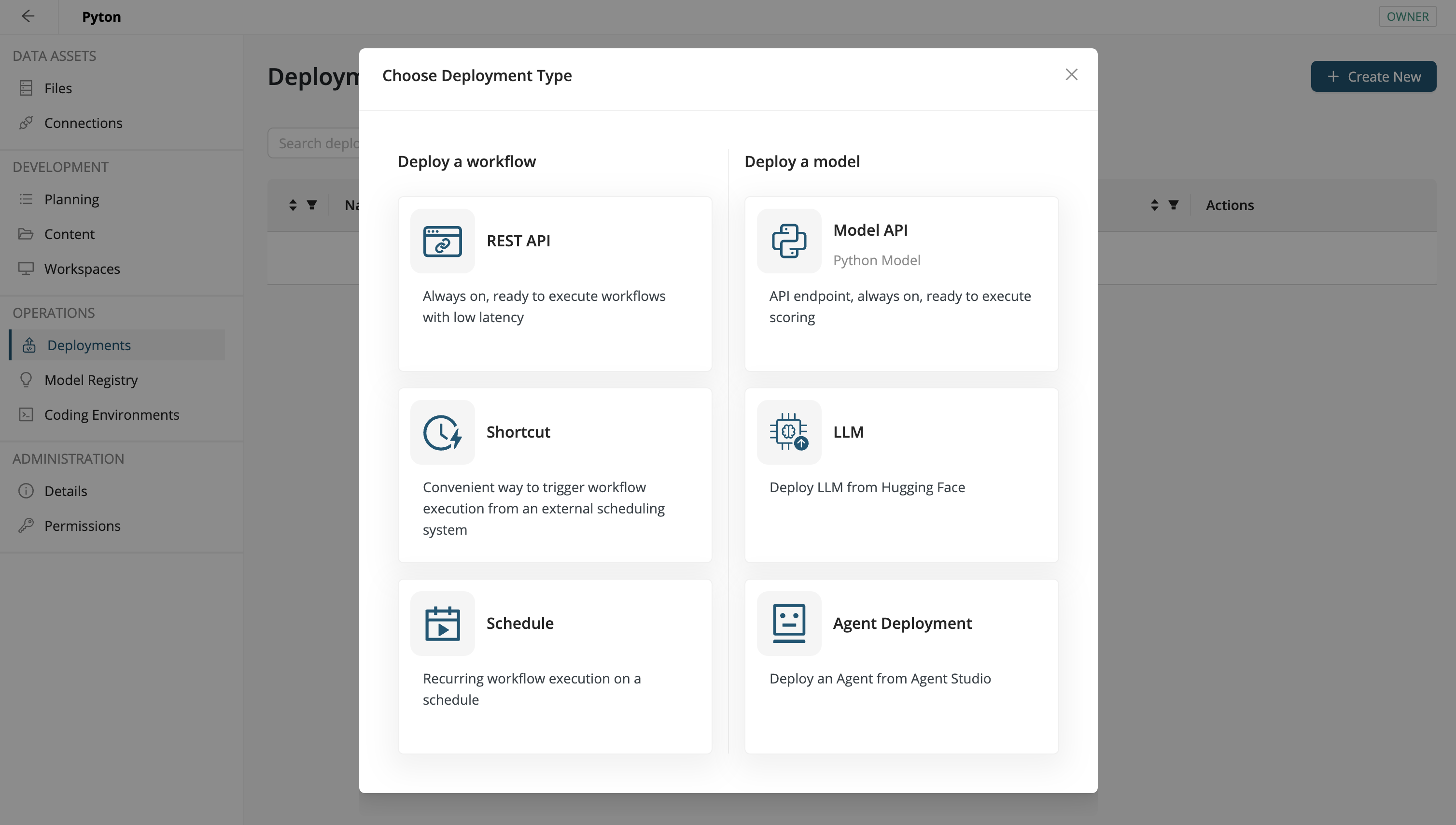

Start by selecting the LLM option under the New > Deployment menu.

The Hugging Face model search is embedded directly into the deployment creation page, allowing you to easily find and select models. You can filter your search based on model type or enter a specific model name to locate it. If you wish to deploy private models, you can provide your Hugging Face Access Token, enabling the search to include private models as well.
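The embedded search is part of the AI Cloud UI and its internals are not described here. As a rough analogy only, the sketch below builds a comparable query against the public Hugging Face Hub models endpoint, filtering by task and optionally attaching an access token so that private models the token can read may be included. The function name `build_model_search` and the example token are hypothetical.

```python
import urllib.parse
import urllib.request
from typing import Optional

# Public Hugging Face Hub endpoint for listing models.
HUB_MODELS_URL = "https://huggingface.co/api/models"


def build_model_search(query: str,
                       task: Optional[str] = None,
                       token: Optional[str] = None,
                       limit: int = 10) -> urllib.request.Request:
    """Build (but do not send) a Hub model search request."""
    params = {"search": query, "limit": str(limit)}
    if task:
        # Narrow results to a task tag such as "text-generation".
        params["filter"] = task
    headers = {}
    if token:
        # Authenticated requests can also see private models the token may read.
        headers["Authorization"] = f"Bearer {token}"
    url = f"{HUB_MODELS_URL}?{urllib.parse.urlencode(params)}"
    return urllib.request.Request(url, headers=headers)


# Hypothetical token value; use your own Hugging Face Access Token.
request = build_model_search("llama", task="text-generation", token="hf_example_token")
print(request.full_url)
```

Passing the returned request to `urllib.request.urlopen` would perform the search; the sketch stops short of that so it can be inspected without network access.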

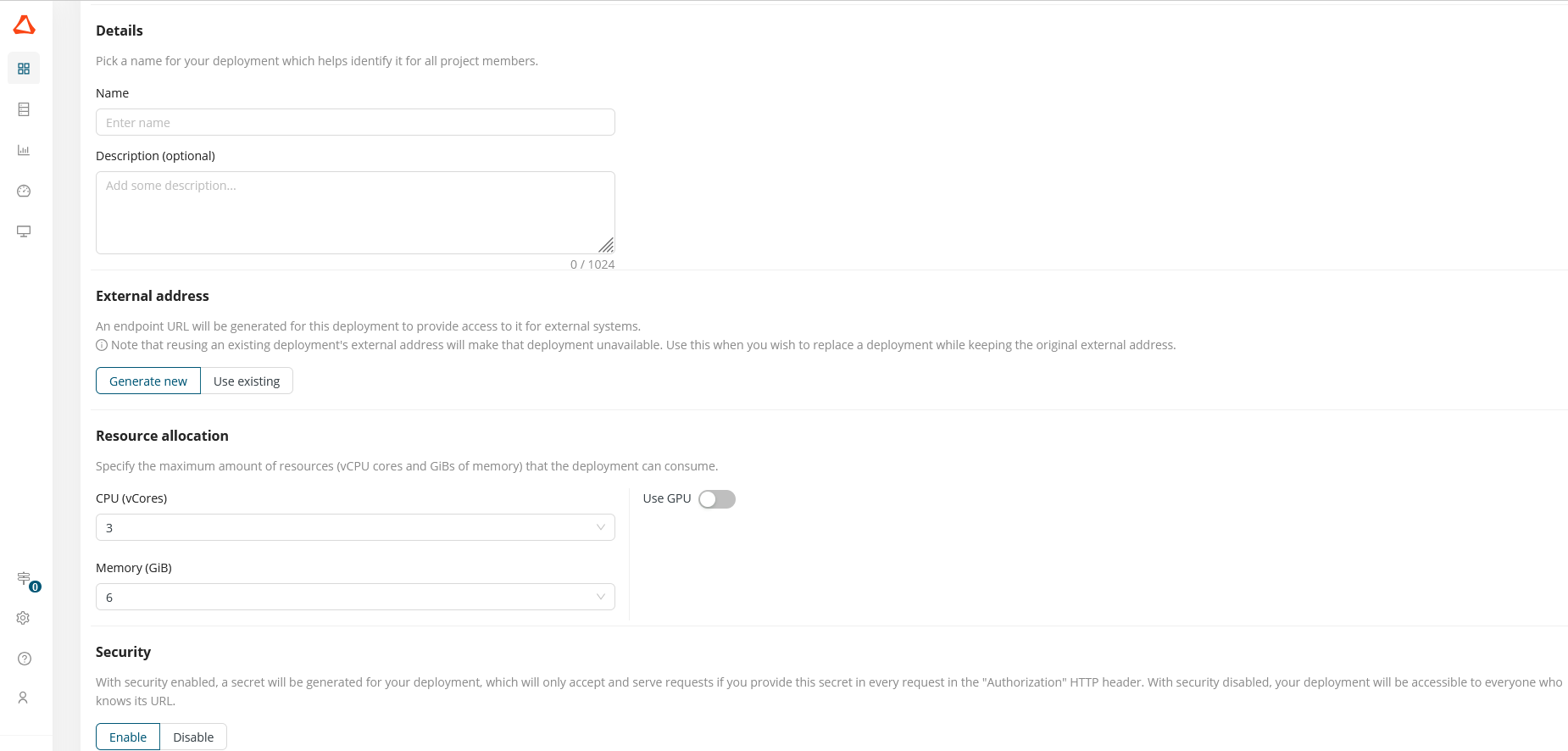

Once you have selected a model, complete the deployment configuration by filling out the required details:

- Model Name & Description – Provide a meaningful name and description for better organization.
- Connection – Switch this toggle on to create an LLM Connection for this deployment, so that you can use it from other parts of the platform, such as Prompt Studio or the prompt operators in Workflow Studio. The connection appears in Data Assets, where you can share it with additional projects.
- Resource Allocation – Configure the necessary computational resources, including enabling GPU support and selecting an appropriate instance size based on your model’s requirements.
- Advanced Settings – Modify additional parameters if needed for your specific model.
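When choosing an instance size, a common rule of thumb is that the model weights alone need roughly the parameter count multiplied by the bytes per parameter. The sketch below applies that rule; it is an approximation that ignores the KV cache, activations, and runtime overhead, and the helper name `estimate_weight_memory_gib` is hypothetical rather than part of AI Cloud.

```python
# Bytes used per parameter for common weight data types.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def estimate_weight_memory_gib(num_params: float, dtype: str = "float16") -> float:
    """Approximate memory needed to hold the model weights, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


# A 7-billion-parameter model stored in float16.
print(f"{estimate_weight_memory_gib(7e9):.1f} GiB")
```

For a 7-billion-parameter model in float16 this gives about 13 GiB for the weights, so the selected instance needs noticeably more memory than that to leave room for inference.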

Deployment time depends on the model size. The Deployment page shows the progress status until the model is fully deployed.

Once the deployment is complete, you can begin interacting with the model:

- The Test Deployment tab allows you to send requests and receive responses to verify functionality.
- The deployed LLM, with its LLM connection, becomes available in Prompt Studio, where it can be selected for prompt testing and evaluation.
- In Workflow Studio, the deployed LLM, with its LLM connection, appears as an option within the Prompt operator, enabling automated workflows that send prompts to the deployed model.

Workflows incorporating the LLM can then be deployed like any other workflow, following the standard process outlined in Deployments.
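For access from external systems via API, a client typically sends an authenticated HTTP request to the deployment endpoint. The sketch below shows the general shape of such a call using only the Python standard library. The endpoint URL, token, and payload fields (`prompt`, `max_tokens`) are all assumptions for illustration; take the real URL, authentication scheme, and request format from your deployment.

```python
import json
import urllib.request

# Hypothetical values: copy the real endpoint URL and credentials from your deployment.
ENDPOINT_URL = "https://example.invalid/deployments/my-llm"
API_TOKEN = "replace-me"


def build_prompt_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build a JSON POST request; the payload fields are illustrative only."""
    payload = {"prompt": prompt, "max_tokens": max_tokens}
    return urllib.request.Request(
        ENDPOINT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {API_TOKEN}",
        },
        method="POST",
    )


request = build_prompt_request("Summarize this ticket in one sentence.")
# To send it against a real endpoint:
# with urllib.request.urlopen(request, timeout=60) as response:
#     print(json.load(response))
```

Building the request separately from sending it makes it easy to log or inspect the payload before pointing the client at a live deployment.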

Autoscaling

Autoscaling is now available for all types of deployments in AI Cloud. It automatically adjusts the number of running service instances based on traffic demand, so that performance holds up under load while resource usage stays efficient.

You can configure autoscaling settings when creating a new deployment via the Deployment creation UI.
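The scaling policy AI Cloud applies is not described on this page. To illustrate the general idea of adjusting instance count to demand, the sketch below uses the proportional rule popularized by the Kubernetes Horizontal Pod Autoscaler: scale the current instance count by the ratio of observed to target load, round up, and clamp to configured bounds. The function and parameter names are hypothetical.

```python
import math


def desired_replicas(current_replicas: int,
                     observed_load: float,
                     target_load: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Scale replicas in proportion to load, then clamp to the allowed range."""
    if target_load <= 0:
        raise ValueError("target_load must be positive")
    # Proportional rule: more load per instance than the target means more replicas.
    raw = math.ceil(current_replicas * observed_load / target_load)
    return max(min_replicas, min(max_replicas, raw))


# Two instances each seeing 90 requests/s against a target of 60 requests/s.
print(desired_replicas(2, observed_load=90, target_load=60))
```

With these inputs the rule asks for three instances; when load drops well below the target, the result falls back toward `min_replicas`.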

Read more: Autoscaling